Compute

“Compute refers to applications and workloads that require a great deal of computation, necessitating sufficient resources to handle these computation demands in an efficient manner.”

Compute-intensive applications stand in contrast to data-intensive applications, which typically handle large volumes of data and as a result place a greater demand on input/output and data manipulation tasks.

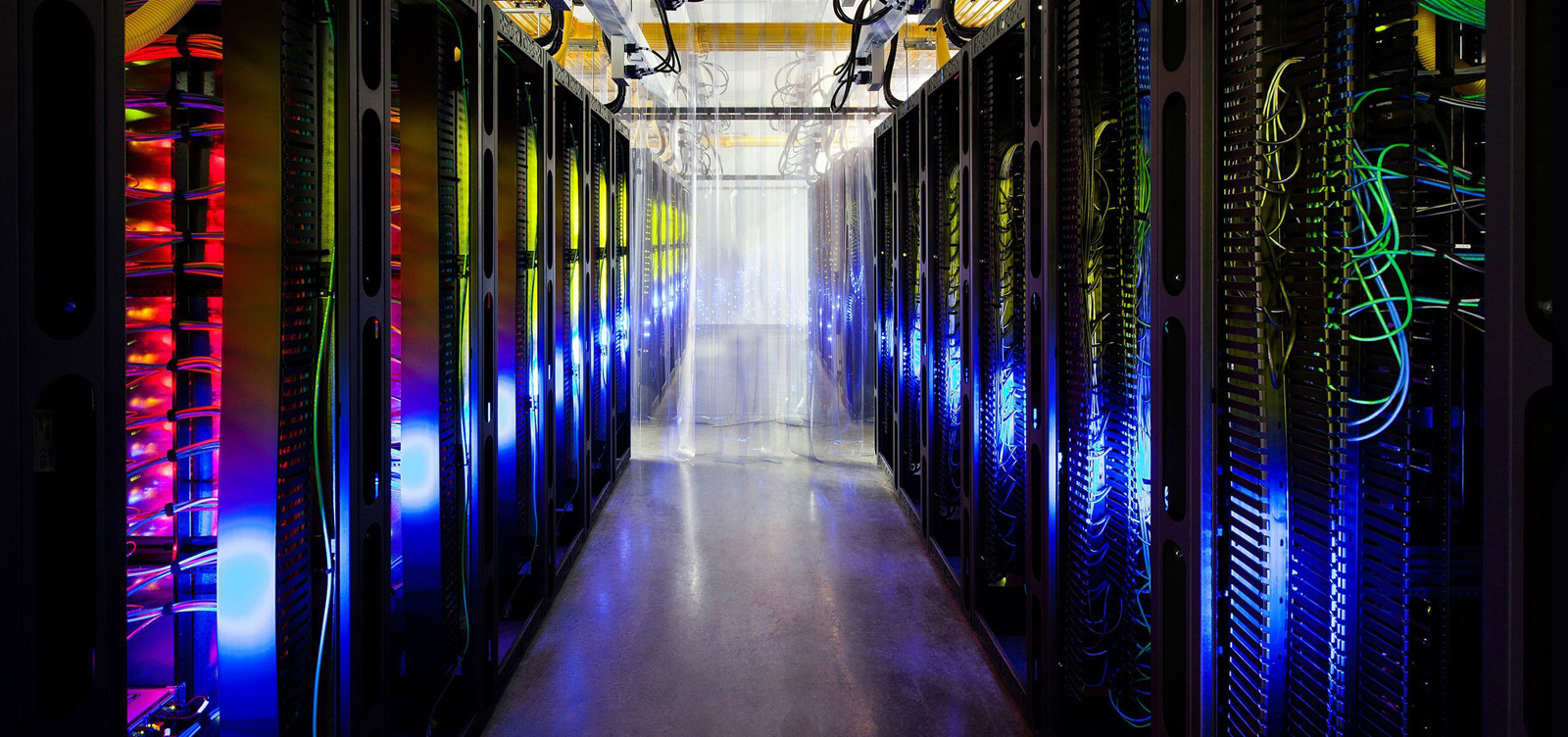

The term compute is frequently encountered in the server and data center space as well as in cloud computing, where infrastructure and resources can be purpose-built to handle compute-intensive applications that require large amounts of compute power for extended periods of time.

Compute is also sometimes referred to as Big Compute, a play on the popular term Big Data.

Big Data

Big data refers to collections of data too large or complex to be adequately managed by traditional data processing means. Organizations in the petrochemical, oil and gas, finance, public safety, medical research, engineering, and academic fields are often the most prominent users of deep analysis of this voluminous data. The challenges these organizations face extend beyond the capture, sharing, storage, privacy, and transfer of the data to the complexities of visualizing it so that it becomes more useful.

The collection and dissemination of big data provide corporations with the ability to analyze trends, dissect user patterns, and incorporate predictive analytics to further their abilities to meet client demands, streamline processes, and stay ahead of the competition.

The ever-increasing deployment of data capture devices such as cameras, smartphones, radio frequency identification (RFID), remote sensors, software logs, and other smart devices can stress the abilities of organizations to manage and analyze big data.

Contact the NordStar Group and explore our portfolio of solutions for managing big data.

Clusters

The compute node is the heart of your high-performance cluster. The application(s) to be run and the size of your cluster will determine the type of node, the number of nodes, and the path to future expansion. High-performance computing (HPC) systems are usually referred to as “clusters”. They are used across a number of industries, by organizations ranging from small and medium-sized businesses (SMBs) to worldwide conglomerates.

A commonly used definition of HPC is “a branch of computer science that concentrates on developing supercomputers and the software to run on supercomputers”. HPC involves aggregating computing power to deliver much higher performance than a single workstation or desktop can achieve.

Multiple computers working in tandem provide unified processing.

Clusters can be moved, added to or changed readily.

Clusters have built-in redundancy, so processing continues if components fail.

Clusters are typically composed of commercial off-the-shelf (COTS) units.
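The redundancy property listed above can be illustrated with a minimal sketch. It is a single-process simulation, not real cluster software: the node names, the `make_node` helper, and the `run_with_failover` function are all hypothetical and exist only for this example. Each simulated node either completes a task or raises an error, and the scheduler hands the task to the next node when one fails.

```python
from typing import Callable, Iterable


class NodeFailure(Exception):
    """Raised by a simulated node that cannot complete its task."""


def make_node(name: str, healthy: bool) -> Callable[[int], int]:
    """Build a hypothetical compute node that squares its input, or fails if unhealthy."""
    def run(task: int) -> int:
        if not healthy:
            raise NodeFailure(f"{name} is down")
        return task * task
    return run


def run_with_failover(task: int, nodes: Iterable[Callable[[int], int]]) -> int:
    """Try each node in turn and return the first successful result."""
    for node in nodes:
        try:
            return node(task)
        except NodeFailure:
            continue  # this node failed; hand the task to the next one
    raise RuntimeError("no healthy node could run the task")


# node-a is down, so every task ends up on node-b and processing continues.
nodes = [make_node("node-a", healthy=False), make_node("node-b", healthy=True)]
results = [run_with_failover(task, nodes) for task in range(4)]
print(results)
```

In a production cluster, a job scheduler or resource manager typically performs this kind of rescheduling across physical machines. The sketch only shows the idea that losing one node need not stop the work.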

Hadoop

Hadoop is an open-source, Java-based software framework that supports the processing and storage of extremely large data sets in a distributed computing environment. It is an Apache project sponsored by the Apache Software Foundation. It delivers high-speed processing and can run a very large number of concurrent tasks, at a highly desirable cost-to-performance ratio.

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, delivering a highly available service on top of a cluster of computers, each of which may be prone to failures.
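One of the "simple programming models" associated with Hadoop is MapReduce. The sketch below illustrates that model with a word count in plain Python, running in a single process. It does not use the Hadoop API, which is Java-based, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are chosen for illustration only.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by their key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: combine all values for one key into a single total."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data big compute", "big clusters"])
print(counts)
```

On a real cluster, the framework runs many map and reduce tasks in parallel on different machines, close to where the data is stored, and reruns tasks that fail. Here everything happens in one process to keep the example short.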

Companies seeking to create greater value from their Hadoop implementation should consult with a NordStar representative and explore the options available.

Cloudera

Cloudera is fundamentally changing enterprise data management by offering the first unified platform for big data: the Enterprise Data Hub. Cloudera gives enterprises one place to process, store, and analyze all their data, allowing them to expand the value of existing investments while enabling new ways to derive value from their data.

Founded in 2008, Cloudera was the first provider and supporter of Apache Hadoop for the enterprise and remains the leading one. Cloudera also provides software for business-critical data challenges including storage, access, management, analysis, security, and search.

Customer success is Cloudera’s definitive priority. Cloudera has enabled long-term, successful deployments for hundreds of customers, with petabytes of data collectively under management, across diverse industries. For additional information on Cloudera products, contact Houston partner NordStar for an immediate response.

Hortonworks

The Hortonworks connected data suite delivers end-to-end capabilities for data-in-motion and data-at-rest. Hortonworks DataFlow collects, curates, analyzes, and delivers real-time data from the Internet of Anything (IoAT): devices, sensors, clickstreams, log files, and more.

Hortonworks Data Platform enables the creation of a secure enterprise data lake and delivers the analytics you need to innovate fast and power real-time business insights. This Hadoop data platform implementation for your data center provides scalable, fault-tolerant, and cost-efficient big data storage and processing capabilities with powerful tools for governance and security.

Together, Houston-based NordStar Group, Hortonworks DataFlow, and Hortonworks Data Platform empower the deployment of modern data applications. For more information on Hortonworks, contact a NordStar representative.